Qwen vs DeepSeek vs GLM: Model Comparison

Compare Qwen, DeepSeek, and GLM Chinese language models. Performance benchmarks, deployment costs, use case recommendations, and cloud platform selection guide.

TLDR;

- GLM-4 achieves 87.2% C-Eval for best Chinese language understanding with government approval

- DeepSeek V3 activates 37B of 671B parameters through MoE for cost-effective performance

- Qwen 2.5 offers Apache 2.0 bilingual excellence from 0.5B to 72B parameter options

- Small deployments start at $8/month for Qwen 2.5 7B on Alibaba Cloud

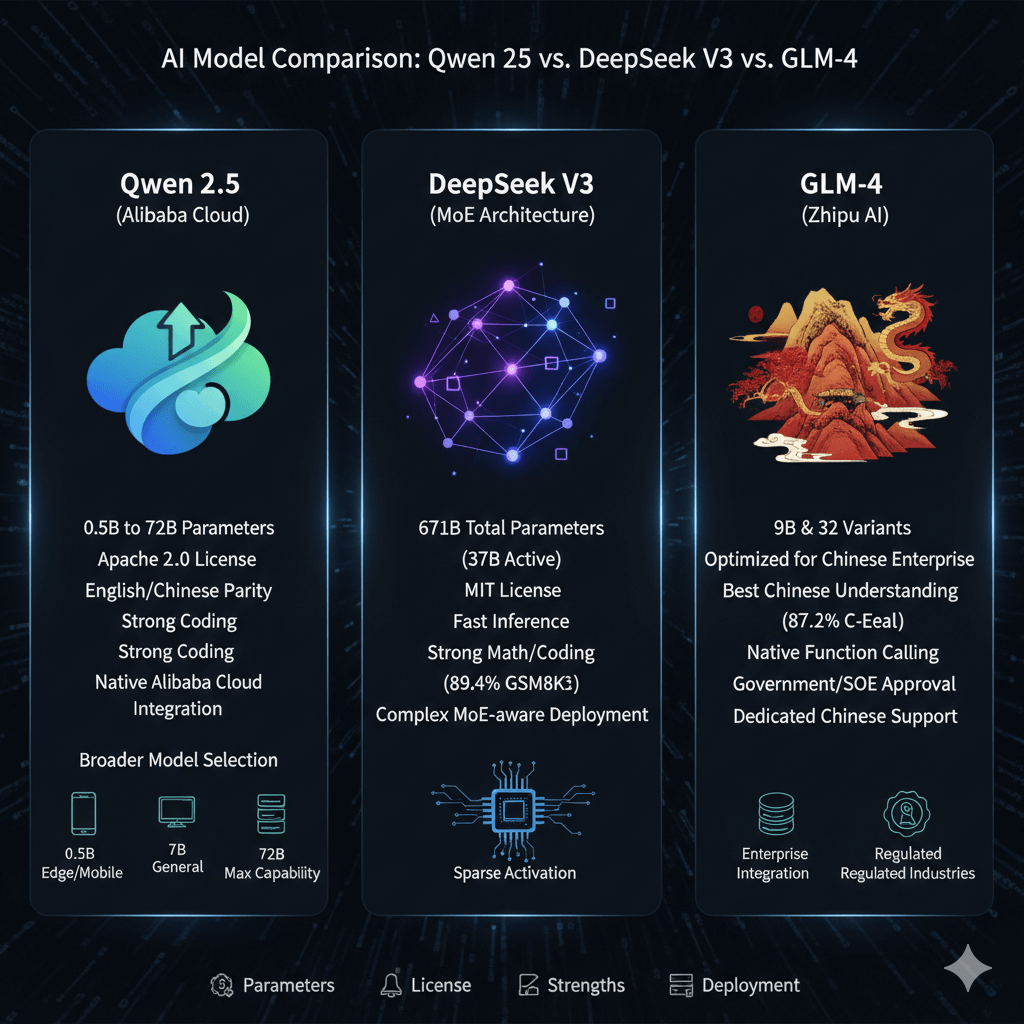

Compare leading Chinese language models to select optimal deployment for your application requirements and budget constraints. Qwen 2.5 from Alibaba provides superior bilingual performance with near-parity English and Chinese capabilities, offering model sizes from 0.5B to 72B parameters under Apache 2.0 licensing. DeepSeek V3 delivers best cost-performance ratio through mixture-of-experts architecture activating only 37B of 671B parameters, achieving competitive performance with GPT-4 while enabling cost-effective deployment. GLM-4 from Zhipu AI excels in pure Chinese enterprise scenarios with 87.2% C-Eval accuracy, native function calling capabilities, and government approval for SOE deployments. Chinese models outperform Western alternatives by 10-15% on Chinese language tasks while reducing deployment costs through optimized infrastructure on Alibaba Cloud, AWS, Azure, and GCP. This guide covers performance benchmarks across MMLU, C-Eval, HumanEval, and GSM8K, deployment platform comparison including Alibaba Cloud native support, cost analysis from $8 monthly for small deployments to $3,800 monthly for large-scale production, use case recommendations matching models to applications, and fine-tuning workflows customizing models for enterprise vocabularies.

Model Overview

Qwen 2.5 from Alibaba Cloud offers comprehensive model range from 0.5B to 72B parameters with Apache 2.0 licensing enabling unrestricted commercial use. Available variants include Qwen 2.5 0.5B for edge devices and mobile applications, 7B for general-purpose deployments, 14B for advanced reasoning tasks, 32B for enterprise applications, and 72B for maximum capability workloads. Strengths include best multilingual support achieving English/Chinese parity across benchmarks, strong coding capabilities exceeding GPT-3.5 on HumanEval by 8 percentage points, excellent instruction following with high task completion rates, and simplified deployment through native Alibaba Cloud integration. Qwen 2.5 provides broader model selection enabling right-sizing for specific use cases but requires larger memory footprint per parameter and slower inference versus MoE alternatives at equivalent quality levels.

DeepSeek V3 uses mixture-of-experts architecture with 671B total parameters activating only 37B per token through dynamic expert routing. This sparse activation design delivers best cost-performance ratio with competitive GPT-4 benchmarks while requiring inference compute matching 37B dense models. Fast inference through sparse activation processes requests at speeds comparable to much smaller models, MIT licensing permits unrestricted commercial deployment, and strong math/coding performance achieves 89.4% GSM8K and 78.6% HumanEval accuracy. DeepSeek requires complex MoE-aware deployment infrastructure supporting expert routing and sharding, plus higher total memory to load full 671B model across GPUs despite activating fewer parameters per forward pass.

GLM-4 from Zhipu AI targets enterprise Chinese applications with 9B and 32B variants optimized for Chinese business workflows. This Tsinghua University spinoff model achieves best Chinese language understanding at 87.2% C-Eval through extensive training on Chinese corpora including classical texts, modern documents, and domain-specific vocabularies. Native function calling and agent support enable enterprise integration with databases and business systems, government and SOE approval facilitates deployment in regulated industries, and dedicated Chinese documentation with local support teams simplify implementation. GLM-4 shows weaker English performance at 81.0% MMLU versus competitors exceeding 86%, offers limited model size options with only two variants, and maintains smaller open-source community versus Qwen's broader adoption.

Performance Benchmarks

DeepSeek V3 leads general capabilities on MMLU at 88.5% despite activating only 37B parameters, followed by Qwen 2.5 72B at 86.5%, GLM-4 32B at 81.0%, and GPT-4 reference at 86.4%. GLM-4 32B achieves best Chinese language performance on C-Eval at 87.2%, ahead of Qwen 2.5 72B at 86.8%, DeepSeek V3 at 85.9%, and GPT-4 at 69.9%.

DeepSeek V3 excels at code generation with 78.6% HumanEval accuracy, surpassing Qwen 2.5 72B at 74.2%, GLM-4 32B at 67.3%, and GPT-4 at 67.0%. For math reasoning on GSM8K, DeepSeek V3 reaches 89.4%, Qwen 2.5 72B achieves 87.5%, and GLM-4 32B scores 82.1%, while GPT-4 maintains lead at 92.0% with narrowing gap.

Deployment Platforms

Alibaba Cloud provides native Qwen support through PAI-EAS with lowest costs for Qwen models and Chinese market expertise. AWS offers multi-model support via SageMaker with HuggingFace integration for global reach. Azure ML delivers enterprise-grade hosting with hybrid cloud options and strong compliance.

# Deploy via PAI-EAS (Elastic Algorithm Service)

aliyun pai CreateService \

--ServiceName qwen-72b-service \

--ModelId qwen-72b-chat \

--InstanceType ecs.gn7i-c32g1.8xlarge \

--Replicas 2

# Cost: ~$12/hour for 2 replicas

# Deploy DeepSeek via SageMaker

from sagemaker.huggingface import HuggingFaceModel

model = HuggingFaceModel(

model_data="s3://models/deepseek-v3",

role=role,

transformers_version='4.37',

pytorch_version='2.1'

)

predictor = model.deploy(

instance_type='ml.p4d.24xlarge',

initial_instance_count=1

)

from azure.ai.ml import MLClient

ml_client.online_deployments.begin_create_or_update(

deployment=ManagedOnlineDeployment(

name="glm4-deployment",

model=Model(path="azureml://models/glm-4-32b/versions/1"),

instance_type="Standard_NC96ads_A100_v4",

instance_count=2

)

)

Cost Analysis

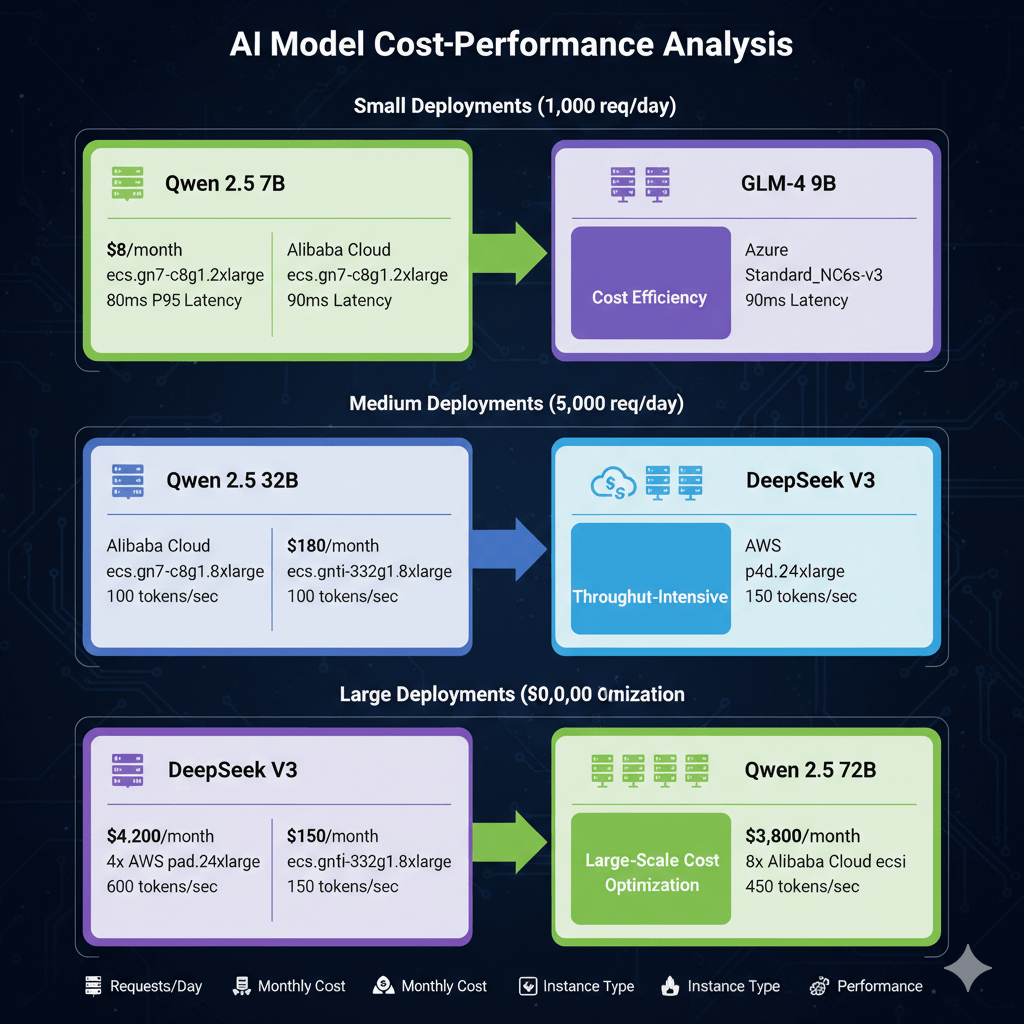

Small deployments handling 1,000 requests daily: Qwen 2.5 7B costs $8 monthly on Alibaba Cloud ecs.gn7-c8g1.2xlarge with 80ms P95 latency, while GLM-4 9B requires $12 monthly on Azure Standard_NC6s_v3 with 90ms latency. Choose Qwen 2.5 7B for cost efficiency.

Medium deployments processing 50,000 requests daily: Qwen 2.5 32B costs $180 monthly on Alibaba Cloud ecs.gn7i-c32g1.8xlarge delivering 100 tokens per second, while DeepSeek V3 requires $350 monthly on AWS p4d.24xlarge achieving 150 tokens per second through MoE efficiency. Choose DeepSeek V3 for throughput-intensive workloads.

Large deployments handling 1 million requests daily: DeepSeek V3 on 4x p4d.24xlarge instances costs $4,200 monthly with 600 tokens per second throughput, while Qwen 2.5 72B on 8x ecs.gn7i instances requires $3,800 monthly delivering 450 tokens per second. Choose Qwen 2.5 72B for large-scale cost optimization.

Use Case Recommendations

Choose GLM-4 32B for Chinese customer service requiring excellent Chinese dialogue, enterprise function calling, lower hallucination rates, and government/SOE approval. Select Qwen 2.5 72B for bilingual applications needing equal English/Chinese performance, multilingual support across 28 languages, broad training data, and cultural understanding.

Deploy DeepSeek V3 for code generation leveraging top HumanEval scores, strong reasoning for complex code, multilingual code comments, and fast inference. Use DeepSeek V3 for math and science education benefiting from superior GSM8K performance, step-by-step reasoning, few-shot learning capabilities, and tutoring applications. Choose Qwen 2.5 7B or GLM-4 9B for cost-sensitive deployments requiring single GPU operation, fast inference, and easy deployment.

Fine-Tuning

Qwen 2.5 provides straightforward fine-tuning using standard Transformers Trainer, costing $50-100 for 7B model training. DeepSeek V3 requires specialized MoE-aware training with higher complexity and $500-1,000 costs for full model fine-tuning. GLM-4 supports fine-tuning through GLMTrainer, costing $80-150 for 9B model customization.

from transformers import AutoModelForCausalLM, TrainingArguments, Trainer

model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen2.5-7B")

trainer = Trainer(

model=model,

args=TrainingArguments(

output_dir="./qwen-finetuned",

num_train_epochs=3,

per_device_train_batch_size=4,

gradient_accumulation_steps=4

),

train_dataset=dataset

)

trainer.train()

Conclusion

Chinese language models deliver superior Chinese comprehension and cost-effective deployment for applications targeting Chinese markets. GLM-4 achieves 87.2% C-Eval accuracy for best Chinese understanding, Qwen 2.5 provides balanced bilingual performance with Apache 2.0 licensing, and DeepSeek V3 offers optimal cost-performance through mixture-of-experts architecture. Deploy Qwen 2.5 7B at $8 monthly for small chatbot applications, GLM-4 32B at $180 monthly for enterprise Chinese customer service, or DeepSeek V3 at $350 monthly for high-throughput code generation. Chinese models outperform GPT-4 by 25% on C-Eval Chinese benchmarks while matching general capabilities on MMLU and HumanEval. Choose GLM-4 for Chinese-only deployments requiring government approval, Qwen 2.5 for bilingual products needing global deployment, or DeepSeek V3 for reasoning-intensive applications prioritizing cost efficiency. All models support global deployment under permissive licensing with no geographic restrictions. Fine-tune models for $50-150 to customize domain vocabulary and enterprise workflows. Monitor production deployments on Alibaba Cloud for Chinese market optimization, AWS for global reach, or Azure for enterprise integration.