44% Better Price-Performance with Oracle Cloud LLMs

Oracle Cloud Infrastructure delivers up to 44% better price-performance for LLMs, with H100 GPUs costing 60–70% less than AWS or Azure. Integrated databases and built-in MLOps enable faster, simpler, and more cost-efficient enterprise AI deployments.

TL;DR

- H100 instances cost 60-70% less than equivalent AWS P5 or Azure ND offerings

- Universal Credits simplify budgeting with predictable costs across all OCI services

- Run ML algorithms directly in Autonomous Database without data movement

- 10TB of free monthly egress, versus $0.09/GB on AWS, can save thousands for high-traffic global deployments

Deploy LLMs on Oracle Cloud Infrastructure with superior price-performance and simplified pricing. OCI delivers up to 44% better price-performance for AI workloads with H100 GPUs, Autonomous Database integration, and predictable Universal Credits costs for enterprise ML deployments.

Why Oracle Cloud ML Makes Financial Sense

Oracle Cloud delivers up to 44% better price-performance for AI workloads compared to major competitors. That's not marketing. It's measurable.

High-end GPU shapes cost significantly less. An H100 instance on OCI costs 60-70% less than equivalent AWS P5 or Azure ND instances. No complicated pricing tiers. No hidden fees. Simple, predictable monthly costs.

The platform integrates deeply with Oracle Database. Your ML models query production data directly. No data movement. No ETL pipelines. No duplicate storage costs.

OCI Data Science platform provides end-to-end MLOps. Jupyter notebooks. Automated pipelines. Model deployment. Monitoring. All included without per-feature pricing.

For enterprises with existing Oracle investments, OCI ML is the obvious choice. Existing support contracts cover ML workloads. A single vendor relationship simplifies procurement. Technical teams leverage existing Oracle expertise.

OCI ML Platform Overview

Oracle structures ML services around data proximity and simplicity.

OCI Data Science Platform

The Data Science platform handles your complete ML lifecycle.

Managed notebooks provide pre-configured JupyterLab environments. Popular frameworks pre-installed: TensorFlow, PyTorch, scikit-learn, XGBoost. GPU acceleration available with one click.

Model catalog stores trained models with versioning. Track experiments. Compare performance. Deploy production models with approval workflows.

Model deployment creates HTTP endpoints automatically. Auto-scaling based on load. Health checks and monitoring included. Deploy from catalog in minutes.

Pipeline creation automates ML workflows. Define data prep, training, evaluation, and deployment steps. Retrain on a schedule or in response to triggers.

Integration with OCI services works seamlessly. Object Storage for datasets. Autonomous Database for features. Vault for secrets. Logging for monitoring. Everything connects natively.

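# Sketch using the Oracle ADS SDK (pip install oracle-ads, imported as `ads`).
# Keyword arguments follow the original example; confirm names such as
# logging_enabled against the ADS documentation for your version.
from ads.model.deployment import ModelDeployment

deployment = ModelDeployment(
    model_id="ocid1.model.oc1...",
    instance_shape="VM.GPU.A10.1",
    instance_count=2,
    bandwidth_mbps=10,
    logging_enabled=True,
)
deployment.deploy()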

GPU Compute Shapes

OCI offers compelling GPU options for LLM deployment.

BM.GPU.H100.8 provides 8 NVIDIA H100 GPUs in a bare metal instance. 640GB total GPU memory. 2TB system RAM. No virtualization overhead. Maximum performance.

VM.GPU.A10.1 offers single A10 GPU with 24GB VRAM. Perfect for smaller models (7B-13B parameters). Cost-effective for development and moderate workloads.

BM.GPU4.8 delivers 8 A100 40GB GPUs in a bare metal instance. 320GB total GPU memory. Handles models up to 200B parameters when quantized to 8-bit or lower. Good balance of performance and cost.

All GPU instances include NVMe local storage. No extra charges. Network bandwidth included based on shape. Predictable pricing simplifies budgeting.
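As a rough illustration of choosing among these shapes, the sketch below picks the smallest shape whose total GPU memory covers a given requirement. The 20% headroom for KV cache and activations is an assumption, not an Oracle recommendation, and `smallest_fitting_shape` is a hypothetical helper.

```python
# Total GPU memory per shape in GB, taken from the shape descriptions above.
SHAPE_GPU_MEMORY_GB = {
    "VM.GPU.A10.1": 24,     # 1 x A10 24GB
    "BM.GPU4.8": 320,       # 8 x A100 40GB (bare metal)
    "BM.GPU.H100.8": 640,   # 8 x H100 80GB (bare metal)
}


def smallest_fitting_shape(required_gb, headroom=0.2):
    """Return the smallest shape covering required_gb plus headroom, or None."""
    needed = required_gb * (1 + headroom)  # headroom is an assumed safety margin
    candidates = [
        (memory, name)
        for name, memory in SHAPE_GPU_MEMORY_GB.items()
        if memory >= needed
    ]
    return min(candidates)[1] if candidates else None


print(smallest_fitting_shape(14))   # about 14GB of weights: 7B model at 16-bit
print(smallest_fitting_shape(140))  # about 140GB of weights: 70B model at 16-bit
print(smallest_fitting_shape(600))  # no single shape fits once headroom is added
```

A `None` result signals that the model needs quantization or a multi-instance deployment.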

Container Engine for Kubernetes

OKE (Container Engine for Kubernetes) runs containerized ML workloads.

The managed control plane costs nothing for basic clusters. You pay only for worker nodes. Check current pricing if you need enhanced cluster features.

GPU node pools configure automatically. NVIDIA drivers pre-installed. CUDA libraries ready. Deploy GPU workloads immediately.

Integration with OCI Container Registry stores your images. Vulnerability scanning included. Image signing for supply chain security.

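# Illustrative node pool settings, not a manifest for kubectl: OKE node pools
# are created through the OCI Console, CLI, API, or Terraform.
apiVersion: v1
kind: NodePool
metadata:
  name: gpu-inference
spec:
  shape: VM.GPU.A10.1
  size: 3
  image: Oracle-Linux-7.9-Gen2-GPU-2024.01
  nsgIds:
    - ocid1.networksecuritygroup.oc1...
  subnets:
    - ocid1.subnet.oc1...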

Database-Integrated ML Workflows

Oracle's unique strength: ML works directly with production databases.

Autonomous Database for ML

Autonomous Database includes machine learning capabilities built-in.

Oracle Machine Learning runs algorithms inside the database. Your data never leaves. Extreme performance. Zero data movement costs.

Build models with SQL or Python. Algorithms optimized for Oracle Database. Leverage database parallelism automatically.

BEGIN
  DBMS_DATA_MINING.CREATE_MODEL(
    model_name          => 'FRAUD_DETECTION_MODEL',
    mining_function     => DBMS_DATA_MINING.CLASSIFICATION,
    data_table_name     => 'TRANSACTIONS',
    case_id_column_name => 'TRANSACTION_ID',
    target_column_name  => 'IS_FRAUD',
    settings_table_name => 'MODEL_SETTINGS'
  );
END;

AutoML selects algorithms automatically. Tunes hyperparameters. Evaluates performance. Picks the best model. No manual experimentation required.

SQL integration lets analysts work with models using familiar tools. No Python required. Query predictions like any other table.

SELECT customer_id, transaction_id,
       PREDICTION(FRAUD_DETECTION_MODEL USING *) AS fraud_prediction,
       PREDICTION_PROBABILITY(FRAUD_DETECTION_MODEL USING *) AS confidence
FROM new_transactions;

Connecting External Models to Database

Deploy external models (PyTorch, TensorFlow), including LLMs, and query them from the database.

OCI Data Science endpoints expose HTTP APIs. Database calls these APIs through UTL_HTTP. Your SQL queries can invoke Llama, other open-weight LLMs, or custom models.

This enables powerful hybrid approaches. Structured data processing in database. Unstructured text processing in LLM. Combined results in single query.

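-- ml_endpoint_predict is a user-defined PL/SQL function that wraps UTL_HTTP;
-- it is not a built-in and must be created first.
SELECT customer_id,
       review_text,
       ml_endpoint_predict(
         'https://modeldeployment.eu-frankfurt-1.oci.oraclecloud.com/ocid1.model...',
         JSON_OBJECT('text' VALUE review_text)
       ) AS sentiment
FROM product_reviews;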

Cost Optimization Strategies

OCI pricing favors enterprise workloads.

Universal Credits and Predictable Pricing

Oracle uses Universal Credits. Buy a commitment. Apply credits to any service. GPU instances, storage, database: all draw from the same pool.

No separate Reserved Instance markets. No complex discount programs. Simple credit purchases with volume discounts.

Annual Flex provides credits for one year. Use across any OCI service. Typically 33% discount versus pay-as-you-go.

Monthly Flex commits to monthly spend. More flexibility. Slightly lower discount (around 25%).

Pay as you go charges monthly for actual usage. No commitment. Highest unit cost. Good for experimentation.
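To make the three options concrete, here is a minimal sketch that applies the discount levels quoted above to a month of usage valued at pay-as-you-go rates. The discount figures are the approximate ones from the text, and the $10,000 usage figure is hypothetical.

```python
# Approximate discounts quoted above; treat them as assumptions, not a price list.
DISCOUNTS = {"pay_as_you_go": 0.00, "monthly_flex": 0.25, "annual_flex": 0.33}


def effective_monthly_cost(list_price_usage, model):
    """Cost of a month's usage (valued at pay-as-you-go rates) under a pricing model."""
    return round(list_price_usage * (1 - DISCOUNTS[model]), 2)


usage = 10_000.00  # hypothetical monthly usage at list price, in USD
for model in DISCOUNTS:
    print(f"{model:>14}: ${effective_monthly_cost(usage, model):,.2f}")
```

The comparison assumes the committed credits are fully consumed. Unused commitment would raise the effective unit cost.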

GPU Cost Comparison

Real pricing (approximate, verify current rates):

H100 8-GPU instance (BM.GPU.H100.8)

- OCI: $32/hour (~$23,040/month)

- AWS P5.48xlarge: $98/hour (~$70,560/month)

- Azure ND96isr_H100_v5: $90/hour (~$64,800/month)

Savings: 60-70% versus AWS/Azure for equivalent H100 capacity.
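The monthly figures and savings range above follow from simple arithmetic, sketched here with the approximate hourly rates from the list. The 720-hour month (30 days of continuous use) is the assumption behind the monthly numbers.

```python
HOURS_PER_MONTH = 720  # 30 days x 24 hours, matching the monthly figures above


def monthly_cost(hourly_rate):
    """Cost of running one instance continuously for a 30-day month."""
    return hourly_rate * HOURS_PER_MONTH


def savings_pct(cheaper_hourly, pricier_hourly):
    """Percentage saved by paying the cheaper rate instead of the pricier one."""
    return round((1 - cheaper_hourly / pricier_hourly) * 100, 1)


# Approximate $/hour for 8x H100 instances, copied from the list above.
h100 = {"OCI": 32.0, "AWS": 98.0, "Azure": 90.0}

for provider, rate in h100.items():
    print(f"{provider}: ${monthly_cost(rate):,.0f}/month")
print("Savings vs AWS:  ", savings_pct(h100["OCI"], h100["AWS"]), "%")
print("Savings vs Azure:", savings_pct(h100["OCI"], h100["Azure"]), "%")
```

Swapping in current list prices is the quickest way to re-check the claim before committing to a budget.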

A10 single GPU (VM.GPU.A10.1)

- OCI: $1.275/hour (~$918/month)

- AWS G5.xlarge: $1.006/hour (~$724/month)

- GCP A2: $1.35/hour (~$972/month)

About 27% above AWS at these rates. Lower than GCP. Simpler billing structure than both.

Network Egress Optimization

OCI includes 10TB of egress free per month. After that, $0.0085 per GB.

Compare to AWS: first 100GB free, then $0.09 per GB (roughly 10x higher).

For ML models serving predictions globally, the savings grow with traffic. A model serving 1M predictions daily at 30KB each generates 30GB egress daily = 900GB monthly. On AWS: $72.00/month. On OCI: $0 (under 10TB threshold). At tens of terabytes per month, the difference reaches thousands of dollars.
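The egress comparison can be reproduced with a small calculator. This is a simplified sketch: it uses one flat per-GB rate per provider, whereas real price lists are tiered, and the AWS free allowance is an assumption to check against the current AWS pricing page.

```python
def egress_cost(total_gb, free_gb, rate_per_gb):
    """Monthly egress charge: subtract the free allowance, bill the rest per GB."""
    billable = max(0.0, total_gb - free_gb)
    return round(billable * rate_per_gb, 2)


OCI_FREE_GB, OCI_RATE = 10 * 1024, 0.0085  # 10TB free, then $0.0085/GB (from the text)
AWS_RATE = 0.09                             # $/GB (from the text), flat-rate simplification
AWS_FREE_GB = 100                           # assumption: verify the current allowance

for monthly_gb in (900, 20_000, 100_000):
    oci = egress_cost(monthly_gb, OCI_FREE_GB, OCI_RATE)
    aws = egress_cost(monthly_gb, AWS_FREE_GB, AWS_RATE)
    print(f"{monthly_gb:>7} GB  OCI ${oci:>9,.2f}  AWS ${aws:>9,.2f}")
```

The gap is small at hundreds of gigabytes and only becomes significant once monthly traffic passes the multi-terabyte range.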

Security and Compliance

Enterprise security built into OCI foundation.

Identity and Access Management

IAM policies define granular permissions. Support for dynamic groups enables compute instances to act without storing credentials.

Allow group data-scientists to use data-science-family in compartment ml-development
Allow group ml-engineers to manage data-science-model-deployments in compartment production

Federation with enterprise identity providers. SAML 2.0 and OAuth 2.0 support. Integrate with Active Directory, Okta, or Azure AD.

MFA enforces strong authentication. Hardware tokens or mobile app TOTP. Required for privileged operations.

Network Isolation

Virtual Cloud Networks (VCNs) isolate workloads. Private subnets prevent internet access. Bastion hosts control administrative access.

Security Lists and Network Security Groups act as firewalls. Define allowed traffic explicitly. Deny everything else by default.

Service Gateway enables private access to OCI services. Traffic to Object Storage, Autonomous Database stays within OCI network. Never traverses internet.

FastConnect provides dedicated network connection from on-premises to OCI. Bypasses public internet entirely. Meets compliance requirements for regulated data.

Data Encryption

All data is encrypted at rest by default. OCI-managed keys work automatically. Zero configuration required.

Customer-managed keys via OCI Vault provide complete control. You create and rotate encryption keys. OCI uses your keys for encryption. You can revoke access anytime.

Hardware Security Modules (HSMs) store sensitive keys. FIPS 140-2 Level 3 certified. Meets strictest compliance requirements.

Monitoring and Management

OCI provides comprehensive monitoring without extra charges.

Monitoring Service

Automatically collects metrics from all OCI resources. GPU utilization. Memory usage. Network throughput. No agent installation required.

Create alarms on any metric. Trigger notifications via email, PagerDuty, Slack. Execute Functions for automated remediation.

Custom metrics via API enable application-specific monitoring. Track model accuracy. Log prediction latency. Alert on business KPIs.

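from datetime import datetime, timezone

import oci
from oci.monitoring import MonitoringClient
from oci.monitoring.models import Datapoint, MetricDataDetails, PostMetricDataDetails

config = oci.config.from_file()  # reads ~/.oci/config by default

# Custom metrics are posted to the regional telemetry-ingestion endpoint.
monitoring_client = MonitoringClient(
    config,
    service_endpoint=f"https://telemetry-ingestion.{config['region']}.oraclecloud.com",
)

metric_data = MetricDataDetails(
    namespace="ml_models",
    compartment_id="ocid1.compartment...",
    name="prediction_latency_ms",
    dimensions={"model": "fraud_detection", "version": "v2"},
    datapoints=[Datapoint(timestamp=datetime.now(timezone.utc), value=45.2)],
)

monitoring_client.post_metric_data(
    post_metric_data_details=PostMetricDataDetails(metric_data=[metric_data])
)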

Logging Service

Centralized logging aggregates logs from all services. VCN flow logs. Audit logs. Application logs. All searchable in one place.

Log queries use simple syntax. Filter by time range, resource, severity. Export results to Object Storage for long-term retention.

Integration with SIEM systems via API or streaming. Send security events to Splunk, LogRhythm, or custom tools.

Getting Started: First Deployment

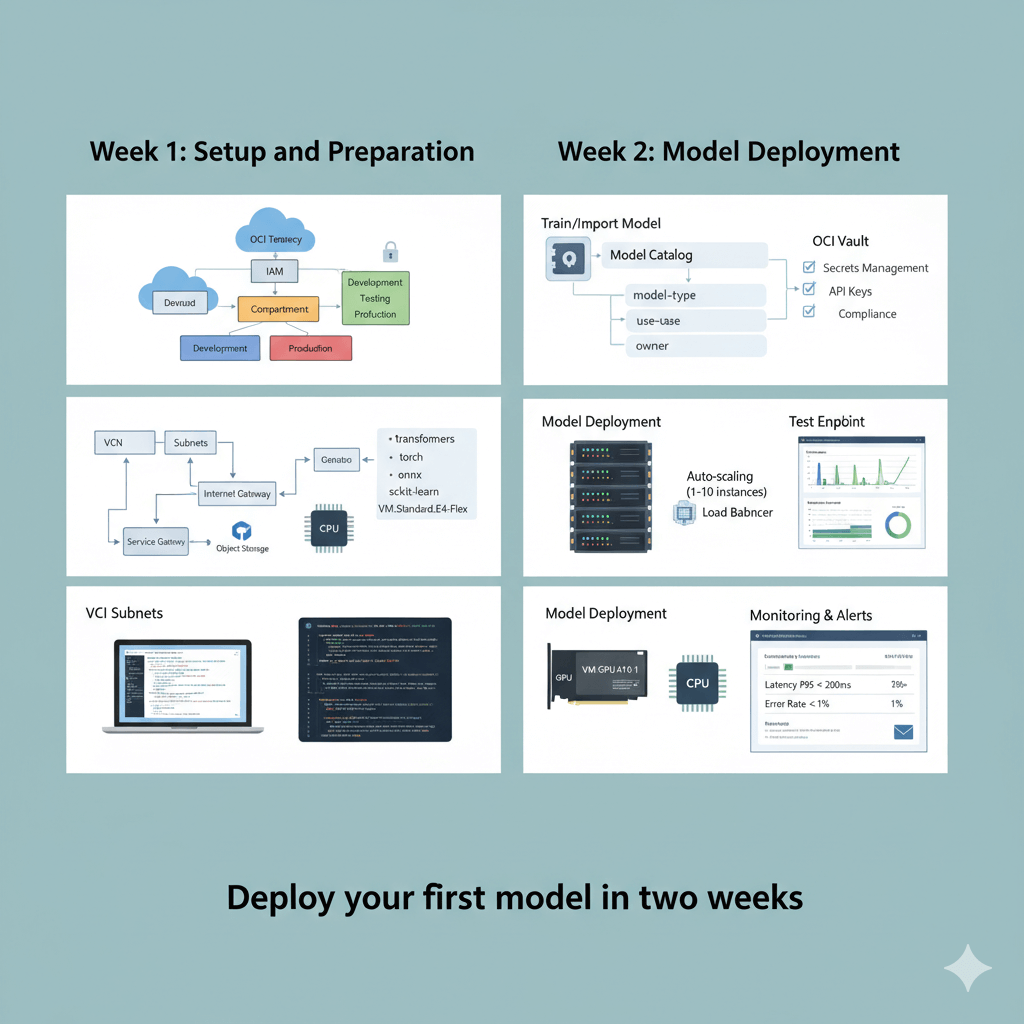

Deploy your first model in two weeks.

Week 1: Setup and Preparation

Create OCI tenancy or use existing account. Set up identity and access management with appropriate user groups. Create compartments for isolation: development, testing, production. This separation enables different security policies and cost tracking per environment.

Set up VCN with private subnets for ML workloads. Configure internet gateway for necessary external access. Create security lists allowing only required traffic. Set up service gateway for private access to Object Storage and other OCI services without internet exposure.

Configure OCI Vault for secrets management. Store API keys, database credentials, and other sensitive information securely. Enable audit logging to track all access and changes for compliance requirements.

Launch Data Science notebook session with appropriate compute shape. Choose GPU shape (VM.GPU.A10.1) if training models locally. CPU shape (VM.Standard.E4.Flex) sufficient for deployment activities and inference testing. Install required libraries: transformers, torch, onnx, scikit-learn.

Week 2: Model Deployment

Train or import your model using supported formats. OCI Data Science accepts ONNX, PyTorch, TensorFlow SavedModel, and scikit-learn pickle formats. For Hugging Face models, export to ONNX for optimal inference performance.

Register model in Model Catalog with comprehensive metadata. Include training date, accuracy metrics, F1 scores, input schema, output format, and model version. Add tags for searchability: model-type, use-case, owner. This metadata helps teams discover and reuse models.

Create model deployment selecting instance shape based on model size and latency requirements. For 7B parameter models, VM.GPU.A10.1 provides good balance. Enable auto-scaling with 1-10 instances initially. Configure load balancer for traffic distribution across instances.

Test endpoint thoroughly with sample data representing production scenarios. Measure P50, P95, P99 latency percentiles. Verify prediction accuracy against validation dataset. Adjust instance shape if latency exceeds requirements or GPU utilization stays below 60%.

Configure comprehensive monitoring and alerts. Set thresholds: latency P95 < 200ms, error rate < 1%, throughput > expected requests per second. Create alarms sending notifications to operations team via email or PagerDuty.
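Before wiring these thresholds into alarms, it can help to check them against test results offline. The sketch below computes nearest-rank percentiles and evaluates the P95 and error-rate limits mentioned above. The function names and the sample latencies are hypothetical.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: smallest sample with at least pct% of values at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def check_thresholds(latencies_ms, errors, total_requests,
                     p95_limit_ms=200, error_rate_limit=0.01):
    """Return a list of alarm messages; an empty list means all thresholds hold."""
    alarms = []
    p95 = percentile(latencies_ms, 95)
    if p95 >= p95_limit_ms:
        alarms.append(f"P95 latency {p95}ms breaches {p95_limit_ms}ms")
    error_rate = errors / total_requests
    if error_rate >= error_rate_limit:
        alarms.append(f"error rate {error_rate:.2%} breaches {error_rate_limit:.0%}")
    return alarms


latencies = [40, 45, 52, 61, 48, 55, 230, 47, 50, 44]  # hypothetical samples in ms
print(percentile(latencies, 50), percentile(latencies, 95), percentile(latencies, 99))
print(check_thresholds(latencies, errors=3, total_requests=1000))
```

With only ten samples, a single slow request dominates P95 and P99, which is why load tests should collect far more data points than this example.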

Document complete deployment for team knowledge sharing. Include endpoint URL, authentication method (API key or instance principal), request/response format with examples, expected response times, troubleshooting steps, and escalation procedures.

Maximizing Value with Oracle Cloud ML

Oracle Cloud Infrastructure delivers compelling advantages for enterprise LLM deployments, and gives startups a scalable way to balance performance with cost efficiency. Price-performance is up to 44% better than major competitors, with H100 GPU instances costing 60–70% less than equivalent AWS or Azure offerings. Universal Credits simplify budgeting with predictable monthly costs.

Autonomous Database integration provides unique capabilities. Run ML algorithms directly in the database without data movement. Query production data alongside model predictions in single SQL statements. This architecture reduces complexity and eliminates data synchronization challenges.

OCI Data Science platform covers the complete MLOps lifecycle with managed notebooks, automated pipelines, and model deployment. Enterprise security includes 90+ compliance certifications with data residency controls meeting GDPR and HIPAA requirements.

Start with existing Oracle Database investments to maximize value. Deploy models using familiar Oracle tools and processes. Scale infrastructure based on actual workload demands using auto-scaling and monitoring capabilities.

Frequently Asked Questions

How does OCI ML compare to AWS SageMaker in terms of features?

OCI Data Science provides core MLOps functionality: notebooks, automated pipelines, model deployment, monitoring. It matches SageMaker for most enterprise use cases.

SageMaker offers more specialized features: SageMaker Clarify for bias detection, SageMaker Neo for edge optimization, broader algorithm marketplace.

OCI provides superior database integration. Direct ML in Autonomous Database. Native JSON, graph, and spatial data support. Simpler architecture for database-centric applications.

Choose OCI if you value cost savings (30-60% typical), Oracle database integration, or predictable pricing. Choose SageMaker if you need cutting-edge features or massive ecosystem.

Can I deploy large language models (70B+ parameters) on OCI?

Yes. Use BM.GPU.H100.8 instances with 640GB total GPU memory. This handles models up to 200B parameters comfortably.

For models exceeding single-instance capacity, deploy across multiple instances. Implement tensor parallelism using DeepSpeed or Megatron-LM. OCI's RDMA networking provides low-latency inter-instance communication.

4-bit quantization (GPTQ, AWQ) reduces weight memory by 75% relative to 16-bit. A 70B model needing 140GB at 16-bit precision requires only 35GB quantized. Fits easily on a single BM.GPU4.8 instance.
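The memory figures above come from multiplying parameter count by bytes per parameter. A minimal sketch, counting model weights only (KV cache, activations, and quantization metadata add to the real footprint):

```python
def weight_memory_gb(params_billions, bits_per_param):
    """Memory for model weights only, in GB (1 GB = 1e9 bytes)."""
    return params_billions * bits_per_param / 8


for bits in (16, 8, 4):
    print(f"70B at {bits}-bit: {weight_memory_gb(70, bits):.0f} GB")

reduction = 1 - weight_memory_gb(70, 4) / weight_memory_gb(70, 16)
print(f"4-bit vs 16-bit reduction: {reduction:.0%}")
```

Leave headroom beyond these numbers when sizing an instance, since serving long contexts or large batches can add tens of gigabytes.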

What's the migration path from AWS or Azure to OCI?

Export models from SageMaker or Azure ML. Models in standard formats (ONNX, PyTorch, TensorFlow SavedModel) import directly to OCI.

Data migration uses OCI Data Transfer Service. Ship drives to Oracle for large datasets (petabytes). Use network transfer for smaller data (terabytes).

Applications calling model endpoints require minimal changes. Update endpoint URLs. Adjust authentication headers for OCI signatures. Logic remains identical.

Budget 2-4 weeks for typical migration. One week for infrastructure setup. One week for model deployment and testing. Additional time for pipeline recreation and validation.

Does OCI support auto-scaling for model deployments?

Yes. Model deployments configure minimum and maximum instance counts. OCI scales automatically based on request load.

Scaling triggers on CPU utilization, memory usage, or request count. Define target thresholds. OCI maintains targets automatically.

Scale-up happens within 2-3 minutes. New instances start, warm up, then join load balancer. Scale-down waits for cooldown period (default 5 minutes) before removing instances.

For traffic spikes, set minimum instances to handle baseline load. Maximum instances accommodate peaks. This prevents cold-start latency while controlling maximum cost.
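The interaction between thresholds, instance limits, and cooldown can be illustrated with a generic target-tracking rule. This is a sketch of the general technique under assumed parameters, not OCI's actual scaling algorithm. Utilization values are integer percentages.

```python
import math


def desired_instances(current, utilization_pct, target_pct, min_instances, max_instances):
    """Size the fleet so average utilization moves toward the target, within limits."""
    if utilization_pct <= 0:
        return min_instances
    wanted = math.ceil(current * utilization_pct / target_pct)
    return max(min_instances, min(max_instances, wanted))


def next_count(current, utilization_pct, target_pct, min_instances, max_instances,
               seconds_since_last_scale, cooldown_seconds=300):
    """Scale up immediately; scale down only after the cooldown has elapsed."""
    wanted = desired_instances(current, utilization_pct, target_pct,
                               min_instances, max_instances)
    if wanted < current and seconds_since_last_scale < cooldown_seconds:
        return current  # hold steady until the cooldown passes
    return wanted


print(next_count(2, 90, 60, 1, 10, seconds_since_last_scale=30))   # scale up
print(next_count(4, 20, 60, 1, 10, seconds_since_last_scale=120))  # held by cooldown
print(next_count(4, 20, 60, 1, 10, seconds_since_last_scale=600))  # scale down
```

The asymmetry is deliberate: reacting quickly to load protects latency, while delaying scale-down avoids thrashing when traffic fluctuates.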

How does OCI handle regulatory compliance for ML workloads?

OCI meets major compliance frameworks: SOC 1/2/3, ISO 27001, HIPAA, PCI DSS, GDPR, FedRAMP. Full list at oracle.com/cloud/compliance.

Data residency controls keep data in specified regions. Configure replication policies. Prevent cross-border data transfer.

Audit logs track all actions. Who accessed which data when. Tamper-proof logging meets regulatory retention requirements.

Oracle can provide BAA for HIPAA workloads. Data Processing Addendum for GDPR. Specialized compliance teams assist with regulated industry deployments.