Deploy Qwen 2.5 with SageMaker Auto-Scaling

Deploy Qwen 2.5 on SageMaker with auto-scaling and cost optimization. This guide shows you step-by-step deployment for production workloads handling thousands of requests daily.

TLDR;

- Deploy Qwen 2.5 on SageMaker in under 30 minutes with auto-scaling for variable traffic

- 4-bit quantization reduces memory by 75% and doubles throughput with minimal quality loss

- Serverless inference costs $6-8/day for 1000 requests versus $36/day always-on

- 3-year reserved capacity saves 64% on ml.g5.2xlarge instances ($8,400/year per instance)

Introduction

Qwen 2.5 delivers strong multilingual performance, especially for Chinese and English text. Open source licensing allows commercial use without restrictions. When combined with SageMaker's managed infrastructure, you get automatic scaling, built-in monitoring, and simplified operations. This guide walks through the complete deployment process, from model preparation to production optimization. You'll learn how to configure auto-scaling for variable traffic, apply quantization to reduce costs by 75%, and monitor endpoint performance with CloudWatch. Whether you're building multilingual chatbots, document analysis systems, or content generation tools for Asian markets, this tutorial provides production-ready code and proven strategies for deploying Qwen 2.5 at scale. Expect deployment in under 30 minutes with immediate cost savings through serverless options or reserved capacity.

Why Qwen 2.5 on SageMaker

SageMaker handles infrastructure automatically. No server management. Auto-scaling based on load. Monitoring built-in. You focus on your application, not operations.

The combination works well for:

- Multilingual customer support chatbots

- Document analysis (Chinese/English)

- Content generation for Asian markets

- Translation services

- Code generation with multilingual comments

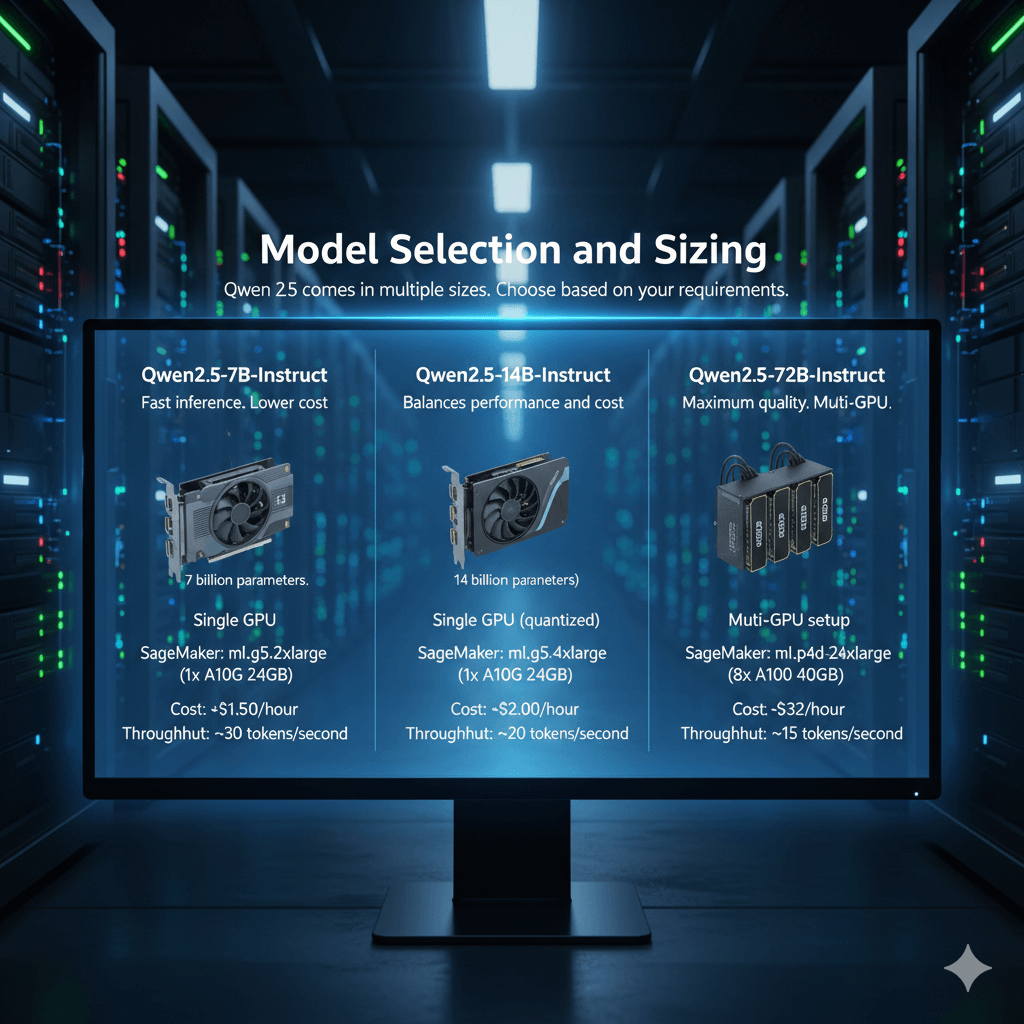

Model Selection and Sizing

Qwen 2.5 comes in multiple sizes. Choose based on your requirements.

Qwen2.5-7B-Instruct suits most applications. 7 billion parameters. Fits on single GPU. Fast inference. Lower cost.

- SageMaker instance: ml.g5.2xlarge (1x A10G 24GB)

- Cost: ~$1.50/hour

- Throughput: ~30 tokens/second

Qwen2.5-14B-Instruct balances performance and cost. Better quality than 7B. Still runs on single GPU with quantization.

- SageMaker instance: ml.g5.4xlarge (1x A10G 24GB)

- Cost: ~$2.00/hour

- Throughput: ~20 tokens/second

Qwen2.5-72B-Instruct delivers maximum quality. Requires multi-GPU setup. Higher cost justified for critical applications.

- SageMaker instance: ml.p4d.24xlarge (8x A100 40GB)

- Cost: ~$32/hour

- Throughput: ~15 tokens/second

Deployment Steps

Step 1: Prepare Model Artifacts

Download and package Qwen 2.5 for SageMaker.

import torch

# Load model

model_id = "Qwen/Qwen2.5-7B-Instruct"

model = AutoModelForCausalLM.from_pretrained(

model_id,

torch_dtype=torch.float16,

device_map="auto"

)

tokenizer = AutoTokenizer.from_pretrained(model_id)

# Save to local directory

model.save_pretrained("./qwen-model")

tokenizer.save_pretrained("./qwen-model")

Create inference script (inference.py):

import torch

from transformers import AutoTokenizer, AutoModelForCausalLM

def model_fn(model_dir):

"""Load model from saved artifacts"""

model = AutoModelForCausalLM.from_pretrained(

model_dir,

torch_dtype=torch.float16,

device_map="auto"

)

tokenizer = AutoTokenizer.from_pretrained(model_dir)

return {'model': model, 'tokenizer': tokenizer}

def predict_fn(data, model_dict):

"""Run inference"""

model = model_dict['model']

tokenizer = model_dict['tokenizer']

prompt = data.pop('inputs', data)

max_length = data.pop('max_length', 512)

temperature = data.pop('temperature', 0.7)

inputs = tokenizer(prompt, return_tensors="pt").to(model.device)

with torch.no_grad():

outputs = model.generate(

**inputs,

max_length=max_length,

temperature=temperature,

do_sample=True

)

return tokenizer.decode(outputs[0], skip_special_tokens=True)

def input_fn(request_body, content_type):

"""Parse input"""

if content_type == 'application/json':

return json.loads(request_body)

raise ValueError(f"Unsupported content type: {content_type}")

def output_fn(prediction, accept):

"""Format output"""

if accept == 'application/json':

return json.dumps({'generated_text': prediction}), accept

raise ValueError(f"Unsupported accept type: {accept}")

Package for SageMaker:

tar -czf model.tar.gz -C qwen-model .

# Upload to S3

aws s3 cp model.tar.gz s3://your-bucket/qwen-model/model.tar.gz

aws s3 cp inference.py s3://your-bucket/qwen-model/code/inference.py

Step 2: Create SageMaker Model

import sagemaker

from sagemaker.huggingface import HuggingFaceModel

role = sagemaker.get_execution_role()

sess = sagemaker.Session()

# Create HuggingFace Model

huggingface_model = HuggingFaceModel(

model_data=f"s3://your-bucket/qwen-model/model.tar.gz",

role=role,

transformers_version='4.37',

pytorch_version='2.1',

py_version='py310',

entry_point='inference.py',

source_dir='s3://your-bucket/qwen-model/code/'

)

Step 3: Deploy Endpoint

predictor = huggingface_model.deploy(

initial_instance_count=1,

instance_type='ml.g5.2xlarge',

endpoint_name='qwen-25-7b-endpoint',

# Auto-scaling configuration

serverless_inference_config=None # Use instance-based

)

Step 4: Configure Auto-Scaling

client = boto3.client('application-autoscaling')

# Register scalable target

response = client.register_scalable_target(

ServiceNamespace='sagemaker',

ResourceId=f'endpoint/qwen-25-7b-endpoint/variant/AllTraffic',

ScalableDimension='sagemaker:variant:DesiredInstanceCount',

MinCapacity=1,

MaxCapacity=10

)

# Create scaling policy

response = client.put_scaling_policy(

PolicyName='qwen-scaling-policy',

ServiceNamespace='sagemaker',

ResourceId=f'endpoint/qwen-25-7b-endpoint/variant/AllTraffic',

ScalableDimension='sagemaker:variant:DesiredInstanceCount',

PolicyType='TargetTrackingScaling',

TargetTrackingScalingPolicyConfiguration={

'TargetValue': 70.0, # Target 70% invocations per instance

'PredefinedMetricSpecification': {

'PredefinedMetricType': 'SageMakerVariantInvocationsPerInstance'

},

'ScaleInCooldown': 300, # 5 minutes

'ScaleOutCooldown': 60 # 1 minute

}

)

Testing the Endpoint

Send test requests:

response = predictor.predict({

"inputs": "Translate to Chinese: Hello, how are you?",

"max_length": 100,

"temperature": 0.7

})

print(response)

Load test with concurrent requests:

import time

def send_request(prompt):

start = time.time()

response = predictor.predict({"inputs": prompt})

latency = time.time() - start

return latency

prompts = [

"Explain quantum computing",

"Write a Python function for sorting",

"Translate: Good morning"

] * 100

with concurrent.futures.ThreadPoolExecutor(max_workers=10) as executor:

latencies = list(executor.map(send_request, prompts))

print(f"Average latency: {sum(latencies)/len(latencies):.2f}s")

print(f"P95 latency: {sorted(latencies)[int(len(latencies)*0.95)]:.2f}s")

Cost Optimization

Reduce SageMaker costs significantly.

Use Serverless Inference for Low Traffic

For applications under 100 requests/hour, serverless costs less:

serverless_config = ServerlessInferenceConfig(

memory_size_in_mb=4096,

max_concurrency=10

)

predictor = huggingface_model.deploy(

serverless_inference_config=serverless_config,

endpoint_name='qwen-serverless'

)

Pricing:

- $0.20 per request

- $0.0003 per GB-second compute

For 1000 requests/day: ~$6-8/day vs $36/day for always-on ml.g5.2xlarge.

Apply Model Quantization

Reduce memory and increase throughput with quantization:

# 4-bit quantization config

bnb_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_compute_dtype=torch.float16,

bnb_4bit_use_double_quant=True,

bnb_4bit_quant_type="nf4"

)

model = AutoModelForCausalLM.from_pretrained(

"Qwen/Qwen2.5-7B-Instruct",

quantization_config=bnb_config,

device_map="auto"

)

Benefits:

- 75% memory reduction (7B model: 28GB → 7GB)

- 2x throughput increase

- Minimal quality degradation (<2% typical)

- Smaller instance types possible

Use Reserved Capacity

For steady production traffic, reserve capacity:

client = boto3.client('sagemaker')

response = client.create_endpoint_config(

EndpointConfigName='qwen-reserved-config',

ProductionVariants=[{

'VariantName': 'AllTraffic',

'ModelName': 'qwen-model',

'InstanceType': 'ml.g5.2xlarge',

'InitialInstanceCount': 2,

'CapacityType': 'RESERVED' # Use reserved capacity

}]

)

Savings:

- 1-year: 42% discount

- 3-year: 64% discount

Example: ml.g5.2xlarge costs $1.50/hour on-demand. With 3-year reservation: $0.54/hour. Savings: $8,400/year for single instance.

Monitoring and Debugging

Track endpoint performance.

CloudWatch Metrics

Key metrics to monitor:

from datetime import datetime, timedelta

cloudwatch = boto3.client('cloudwatch')

# Get invocation metrics

response = cloudwatch.get_metric_statistics(

Namespace='AWS/SageMaker',

MetricName='ModelLatency',

Dimensions=[

{'Name': 'EndpointName', 'Value': 'qwen-25-7b-endpoint'},

{'Name': 'VariantName', 'Value': 'AllTraffic'}

],

StartTime=datetime.now() - timedelta(hours=1),

EndTime=datetime.now(),

Period=300, # 5 minutes

Statistics=['Average', 'Maximum', 'p95']

)

print(response['Datapoints'])

Create alarms:

cloudwatch.put_metric_alarm(

AlarmName='qwen-high-latency',

ComparisonOperator='GreaterThanThreshold',

EvaluationPeriods=2,

MetricName='ModelLatency',

Namespace='AWS/SageMaker',

Period=300,

Statistic='Average',

Threshold=2000.0, # 2 seconds

ActionsEnabled=True,

AlarmActions=['arn:aws:sns:region:account:topic'],

Dimensions=[

{'Name': 'EndpointName', 'Value': 'qwen-25-7b-endpoint'}

]

)

Enable Model Monitor

Detect data drift:

# Enable data capture

data_capture_config = DataCaptureConfig(

enable_capture=True,

sampling_percentage=100,

destination_s3_uri=f's3://your-bucket/qwen-monitoring'

)

# Create monitor

model_monitor = DefaultModelMonitor(

role=role,

instance_count=1,

instance_type='ml.m5.xlarge',

max_runtime_in_seconds=3600

)

# Schedule monitoring

model_monitor.create_monitoring_schedule(

monitor_schedule_name='qwen-monitor',

endpoint_input=predictor.endpoint_name,

statistics=baseline_statistics,

constraints=baseline_constraints,

schedule_cron_expression='cron(0 * * * ? *)' # Hourly

)

Conclusion

Deploying Qwen 2.5 on SageMaker provides a production-ready platform for multilingual LLM applications. Auto-scaling handles variable traffic automatically, while quantization and serverless options reduce costs by up to 75%. Reserved instances deliver additional savings for steady workloads. The combination of SageMaker's managed infrastructure and Qwen's strong Chinese-English performance creates an optimal solution for global applications. Start with the 7B model for most use cases, leveraging serverless deployment for low-traffic scenarios and reserved capacity for production scale. Monitor performance through CloudWatch, optimize costs through quantization, and scale confidently knowing your infrastructure adapts to demand. For multilingual chatbots, document analysis, or content generation serving Asian markets, this deployment pattern delivers reliability and cost-efficiency at production scale.

Frequently Asked Questions

How does Qwen compare to Llama for Chinese language tasks?

Qwen significantly outperforms Llama models on Chinese language benchmarks due to its training data composition (40% Chinese vs <5% for Llama). On C-Eval (Chinese knowledge), Qwen 2.5 72B scores 89.5% versus Llama 3.1 70B at 67.3%. For Chinese-English translation, Qwen achieves 35.2 BLEU versus Llama's 28.1. Qwen's tokenizer is optimized for Chinese characters, using 30% fewer tokens than Llama for the same Chinese text, reducing costs and latency. However, Llama 3.1 performs better on English-only tasks and has broader ecosystem support. Choose Qwen for Chinese-primary applications, multilingual Asian markets, or when serving Chinese users. Choose Llama for English-focused use cases or when you need extensive third-party tooling and integrations.

Can I fine-tune Qwen on SageMaker for domain-specific tasks?

Yes, SageMaker supports Qwen fine-tuning via HuggingFace training jobs. Use ml.p4d.24xlarge instances (8x A100 GPUs) for efficient training - Qwen 72B fine-tuning on 10K examples takes 8-12 hours and costs ~$800. Leverage QLoRA for memory-efficient fine-tuning, reducing GPU requirements by 75% (fine-tune on ml.g5.12xlarge with 4x A10 for ~$120). SageMaker provides built-in distributed training, checkpoint management, and experiment tracking. For production fine-tuning pipelines, use SageMaker Pipelines to automate data preprocessing, training, evaluation, and model registration. Alibaba Cloud (Qwen's origin) offers native Qwen fine-tuning with potentially better support, but SageMaker provides superior AWS ecosystem integration for teams already on AWS infrastructure.

What are the cost implications of deploying Qwen 72B versus GPT-4 API?

Deploying Qwen 72B on SageMaker ml.p4d.24xlarge costs $32.77/hour on-demand ($23,918/month 24/7) or $19.68/hour with 1-year RI ($14,370/month). At 1M requests/month averaging 500 tokens each (250 input, 250 output), total tokens: 500M. SageMaker cost: $14,370/month flat. GPT-4 Turbo API cost: 500M tokens × ($0.01/1K input + $0.03/1K output) = $20,000/month. Qwen becomes cheaper above 720K requests/month. However, GPT-4 requires zero infrastructure management, provides instant scaling, and offers superior performance on complex reasoning. Break-even analysis: Use GPT-4 API for <1M requests/month, variable traffic, or when you need absolute best quality. Deploy Qwen on SageMaker for >1M requests/month sustained load, Chinese language focus, or data residency requirements preventing API usage.