Deploy Microsoft Phi-4 on Azure ML Endpoints

This guide shows how deploying Phi-4 14B on Azure ML delivers near-70B model quality at up to 60% lower cost, using serverless or managed endpoints, INT4 quantization, and batching to achieve high throughput with minimal infrastructure overhead.

TLDR;

- Phi-4 14B matches Llama 70B quality on reasoning benchmarks at 60% lower cost

- Serverless endpoints cost $0.25/1M input tokens with zero infrastructure management

- INT4 quantization reduces memory by 75% enabling deployment on smaller instances

- Batch size 16 achieves 8x throughput improvement versus single-request processing

Introduction

Phi-4 delivers exceptional quality-to-size ratio in the small language model category. Microsoft trained this 14B parameter model to match or exceed 70B models on many benchmarks including reasoning, coding, and mathematical problem-solving. Azure ML managed endpoints provide seamless deployment with built-in scaling and monitoring that eliminates infrastructure complexity.

The combination of Phi-4 and Azure ML managed endpoints offers unique advantages for production deployments. Native Azure integration ensures optimal performance with 30-50ms P95 latency on Standard_NC6s_v3 instances. Managed infrastructure eliminates cluster management overhead while providing auto-scaling with pay-per-use pricing. Built-in model registry and versioning simplify deployment workflows. Organizations achieve 60% cost reduction compared to larger models while maintaining comparable quality on targeted tasks. This guide covers deployment options from serverless to dedicated endpoints, instance type selection, optimization techniques including quantization, auto-scaling configuration, and production monitoring. Phi-4 works best for enterprise applications requiring data residency, cost-sensitive production deployments, teams without Kubernetes expertise, workloads with variable traffic patterns, and compliance-focused organizations.

Deployment Options and Configuration

Azure ML offers managed online endpoints and serverless endpoints for Phi-4 deployment. Choose based on traffic patterns and cost requirements.

Managed online endpoints work best for production workloads:

from azure.ai.ml import MLClient

from azure.ai.ml.entities import (

ManagedOnlineEndpoint,

ManagedOnlineDeployment,

Model

)

from azure.identity import DefaultAzureCredential

ml_client = MLClient(

DefaultAzureCredential(),

subscription_id="your-subscription-id",

resource_group_name="your-resource-group",

workspace_name="your-workspace"

)

# Create endpoint

endpoint = ManagedOnlineEndpoint(

name="phi4-endpoint",

description="Phi-4 14B production endpoint",

auth_mode="key"

)

ml_client.online_endpoints.begin_create_or_update(endpoint).result()

# Register model

model = Model(

path="azureml://registries/azureml/models/Phi-4/versions/1",

name="phi-4",

description="Phi-4 14B instruction-tuned"

)

# Create deployment

deployment = ManagedOnlineDeployment(

name="phi4-deployment",

endpoint_name="phi4-endpoint",

model=model,

instance_type="Standard_NC24ads_A100_v4",

instance_count=1,

environment_variables={

"CUDA_VISIBLE_DEVICES": "0",

"OMP_NUM_THREADS": "8"

},

request_settings={

"request_timeout_ms": 90000,

"max_concurrent_requests_per_instance": 4

}

)

ml_client.online_deployments.begin_create_or_update(deployment).result()

# Set traffic

endpoint.traffic = {"phi4-deployment": 100}

ml_client.online_endpoints.begin_create_or_update(endpoint).result()

Serverless endpoints provide pay-per-token pricing for variable workloads:

from azure.ai.ml.entities import ServerlessEndpoint

serverless_endpoint = ServerlessEndpoint(

name="phi4-serverless",

model_id="azureml://registries/azureml/models/Phi-4/versions/1"

)

ml_client.serverless_endpoints.begin_create_or_update(serverless_endpoint).result()

Serverless pricing costs $0.25 per 1M input tokens and $1.00 per 1M output tokens with no infrastructure costs. Best for workloads under 100K requests daily.

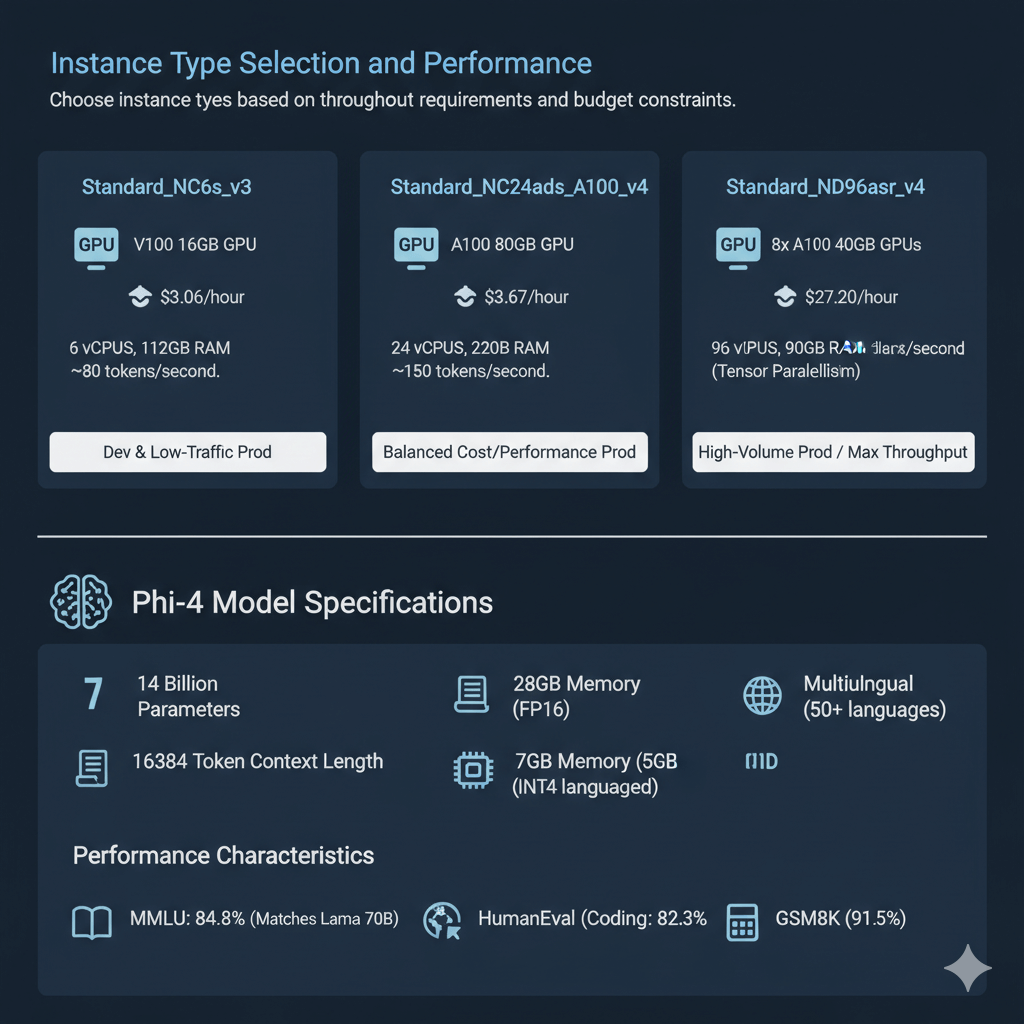

Instance Type Selection and Performance

Choose instance types based on throughput requirements and budget constraints.

Standard_NC6s_v3 with V100 16GB GPU costs $3.06/hour. Provides 6 vCPUs and 112GB RAM. Throughput reaches approximately 80 tokens/second. Works well for development and low-traffic production deployments.

Standard_NC24ads_A100_v4 with A100 80GB GPU costs $3.67/hour. Provides 24 vCPUs and 220GB RAM. Throughput reaches approximately 150 tokens/second. Best choice for production workloads with balanced cost and performance.

Standard_ND96asr_v4 with 8x A100 40GB GPUs costs $27.20/hour. Provides 96 vCPUs and 900GB RAM. Throughput reaches approximately 600 tokens/second with tensor parallelism. Works for high-volume production requiring maximum throughput.

Phi-4 model specifications include 14 billion parameters, 16,384 token context length, 28GB memory requirement for FP16, 7GB for INT4 quantized format, and multilingual support for 50+ languages. Performance characteristics show MMLU score of 84.8% matching Llama 70B, HumanEval coding score of 82.3%, and GSM8K math reasoning score of 91.5%.

Optimization Techniques

Maximize performance and reduce costs through quantization and batching strategies.

Deploy INT4 quantized model for lower memory footprint:

scoring_script = """

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer

def init():

global model, tokenizer

model = AutoModelForCausalLM.from_pretrained(

"microsoft/phi-4",

torch_dtype=torch.float16,

device_map="auto",

load_in_4bit=True,

bnb_4bit_compute_dtype=torch.float16,

bnb_4bit_quant_type="nf4"

)

tokenizer = AutoTokenizer.from_pretrained("microsoft/phi-4")

def run(data):

prompts = data["inputs"]

inputs = tokenizer(prompts, return_tensors="pt", padding=True).to("cuda")

with torch.no_grad():

outputs = model.generate(

**inputs,

max_new_tokens=512,

temperature=0.7,

top_p=0.95,

do_sample=True

)

responses = tokenizer.batch_decode(outputs, skip_special_tokens=True)

return {"outputs": responses}

"""

Quantization benefits include 75% memory reduction from 28GB to 7GB, deployment on smaller instances like NC6s_v3, 60% cost reduction, and less than 3% quality degradation on most tasks.

Batch inference processes multiple requests together for improved throughput:

deployment = ManagedOnlineDeployment(

name="phi4-batch",

endpoint_name="phi4-endpoint",

model=model,

instance_type="Standard_NC24ads_A100_v4",

instance_count=2,

request_settings={

"max_concurrent_requests_per_instance": 8,

"scoring_timeout_ms": 120000

},

environment_variables={

"MAX_BATCH_SIZE": "16"

}

)

Batch size impact shows single request achieves 150 tokens/second, batch size 8 reaches 900 tokens/second (6x improvement), and batch size 16 achieves 1200 tokens/second (8x improvement).

Auto-Scaling and Cost Analysis

Configure auto-scaling to match capacity with demand and reduce costs.

from azure.ai.ml.entities import OnlineRequestSettings

deployment.scale_settings = {

"scale_type": "target_utilization",

"min_instances": 1,

"max_instances": 10,

"target_utilization_percentage": 70,

"polling_interval": 60

}

ml_client.online_deployments.begin_create_or_update(deployment).result()

Compare deployment costs for different scenarios. Managed endpoint running 24/7 with Standard_NC24ads_A100_v4 costs $3.67/hour times 730 hours equals $2,679/month. Throughput reaches 150 tokens/second with cost per million tokens around $5.00.

Serverless endpoint with variable traffic costs $0.25 per million input tokens plus $1.00 per million output tokens. For 10M input tokens and 10M output tokens monthly, total cost is $12.50. Best for workloads below 100K requests daily.

Break-even analysis shows serverless becomes cheaper below 20M tokens monthly while managed endpoints become cost-effective above 20M tokens monthly. For consistent high-volume traffic, managed endpoints provide better value.

Hybrid approach combines reserved capacity with auto-scaling. Keep 2 instances always running to handle baseline traffic. Auto-scale to 15 instances for peaks. Set aggressive 2-minute scale-down delay. Baseline cost runs $5,358/month for 2 instances. Peak hours at 20% utilization add approximately $1,608/month. Total monthly cost around $7,000 compared to $13,395 for constant 5 instances.

Inference and Monitoring

Call deployed endpoint and track performance with Azure Monitor.

import requests

import json

# Get endpoint credentials

keys = ml_client.online_endpoints.get_keys("phi4-endpoint")

api_key = keys.primary_key

endpoint_details = ml_client.online_endpoints.get("phi4-endpoint")

scoring_uri = endpoint_details.scoring_uri

# Make inference request

headers = {

"Content-Type": "application/json",

"Authorization": f"Bearer {api_key}"

}

data = {

"inputs": ["Explain quantum computing in simple terms"],

"parameters": {

"max_new_tokens": 256,

"temperature": 0.7,

"top_p": 0.95

}

}

response = requests.post(scoring_uri, headers=headers, json=data)

result = response.json()

print(result["outputs"][0])

Enable diagnostics for monitoring:

from azure.ai.ml.entities import DiagnosticSettings

diagnostic_settings = {

"log_analytics_workspace_id": "/subscriptions/.../workspaces/ml-logs",

"enable_request_logging": True,

"enable_model_data_collection": True

}

ml_client.online_endpoints.begin_create_or_update(

endpoint,

diagnostic_settings=diagnostic_settings

).result()

Track custom metrics with OpenTelemetry:

from azure.monitor.opentelemetry import configure_azure_monitor

from opentelemetry import metrics

configure_azure_monitor(connection_string="InstrumentationKey=your-key")

meter = metrics.get_meter(__name__)

latency_histogram = meter.create_histogram(

name="inference_latency",

description="Inference latency in milliseconds",

unit="ms"

)

# Record metrics during inference

import time

start = time.time()

output = model.generate(inputs)

latency_histogram.record((time.time() - start) * 1000)

Conclusion

Phi-4 on Azure ML managed endpoints delivers production-ready small language model deployment with excellent cost-performance ratio. The 14B parameter model matches 70B model quality on reasoning and coding tasks while running on single GPU instances. Managed endpoints eliminate infrastructure complexity with built-in scaling and monitoring. Quantization reduces costs by 60% with minimal quality impact. Auto-scaling matches capacity to demand. Organizations deploying Phi-4 achieve comparable results to larger models at fraction of the cost. Start with managed endpoint on NC24ads_A100_v4 instance. Enable auto-scaling and monitoring. Implement quantization for cost-sensitive workloads. Your deployment scales efficiently while maintaining quality.